Breaking the law seems like a rational act for AI startups, and what to do about it at the AI summit

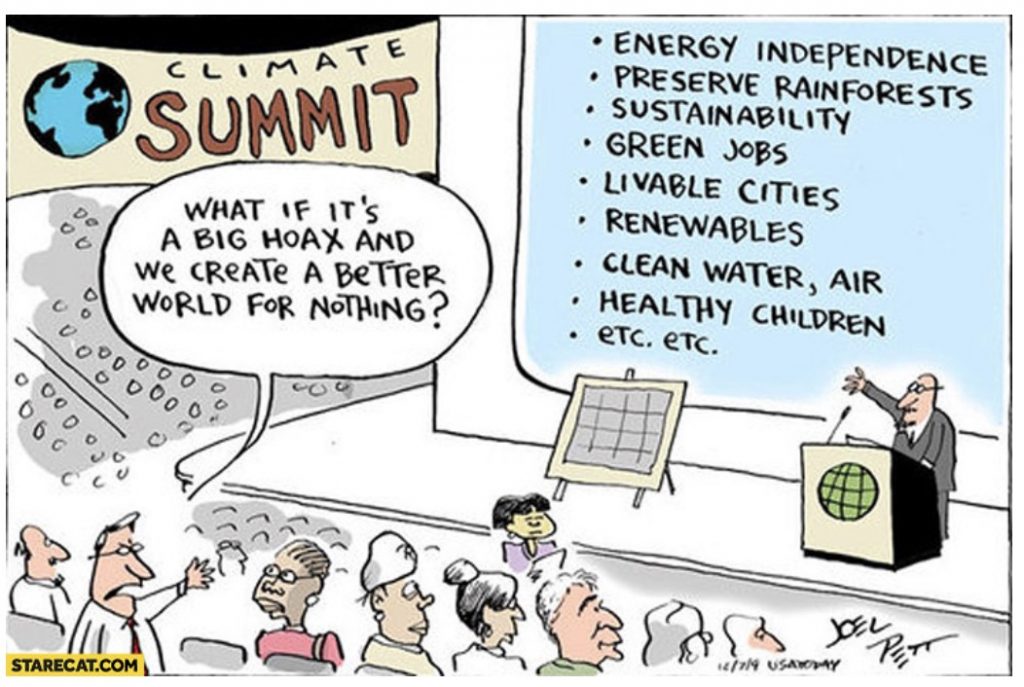

“National security” scale risks are the topic of the “AI Safety Summit”, which is the sort of thing you want governments to spend their time reducing. I’m glad there’s a summit on a potential catastrophe, as we should do more about all catastrophic risks… so how’s climate going?

Some in civil society argue that catastrophic harms are not the best topic for a summit, and they may even be right, but it is the summit it is. That the summit organisers cited the power of health chatbots as the epitome of civil society (p17) suggests someone listened too much to the bankrupt company Babylon rather than those who were proven right. The cash for scrutiny of health chatbots in civil society is probably less than the summit will spend on snacks and nibbles, and certainly less than the well-funded tech fringe will spend on refreshments (although maybe it’s more than Babylon Health PLC’s current market cap). Show me your budgets, and I’ll see your priorities.

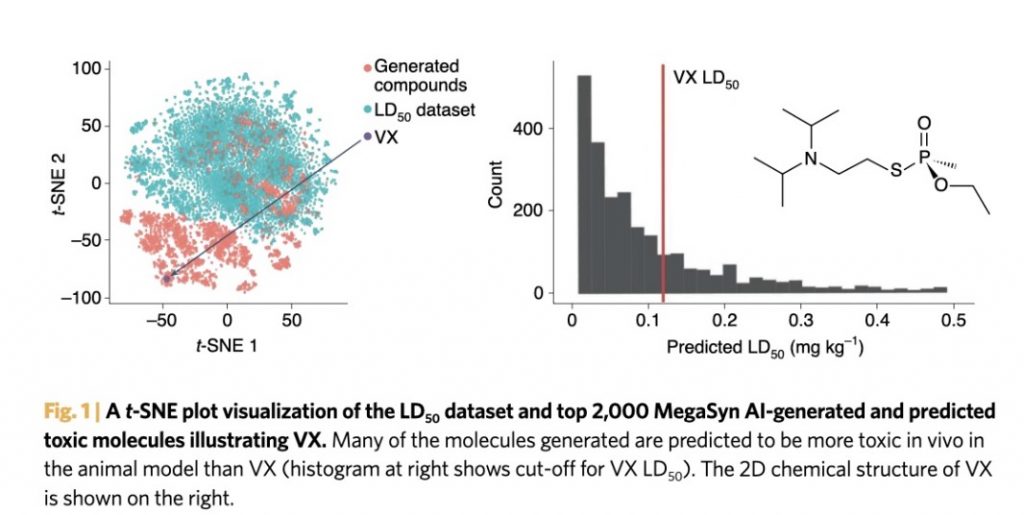

AI has been used to model every chemical compound possible which helps discovery of drugs. It also found sarin, VX, and a couple of other new nasties open source science was not aware of. Hopefully someone is working on treatments, and we can use science and engineering to minimise harms worldwide. It’s cheaper to destroy than create. If the AI advocates want to mitigate xrisk from such chemical AIs, a widespread response plan and capabilities for all toxins is needed to prevent, prepare, and respond.

Things can change rapidly when there’s innovation and need, which can be good news, or bad news, depending on the goals and the integrity of the actors.

This is a short canter through some harms of today, what my experience might offer as scenarios for planning, and maybe a little hope.

The Sams

The only difference between an innovator and a criminal is the outcome.

Unfortunately, outcomes are only known at the end.

Zuck was seen as a saviour before being seen as a tyrant, and the metaverse is still to come. Several Sams are on a journey, one Sam is in court, Altman is on an incline; and I’m here.

Laws and rules don’t help, as every culture that wants to be “responsible” will get gamed for private benefit. It’s true of rules and teenagers, and it’s how Uber came to London with the same game plan they ran everywhere else. I think Silicon Valley calls it blitzscaling; welcome to London.

A blood test for cancer was impossible until, now, it looks reasonable; Theranos was a fraud 10 years ago, but what it promised may be possible in a decade.

Outcomes are the only difference between innovators and criminals, and criminals lie about their outcomes.

Public lies for private gain

Those who want to game the system are doing their own thing for their own private gain.

Time and time again, whether it’s a journalist wanting to believe that Satoshi Nakamoto was giving him a story, or the tales of Theranos, Babylon, FTX, or whoever else, we see many well-meaning but under-informed commentators get taken in by a nice story, never recognising that the dishonest can move faster than the honest, because bullshit is cheap and easy. Dishonesty doesn’t have to be consistent, and the honest will wrestle with uncertainty.

Cognitive dissonance

Innovators don’t know what they don’t know.

I was once asked to a meeting in Google DeepMind’s offices, organised by email through a google.com email address, to tell me something wasn’t about Google. The companies are as bad as the criminals – it’s not that they intended to be dishonest, it’s that no tyrant ever failed to justify their crimes.

It’s easier to steal a dataset to figure out if something novel works for the first time, than it is to justify resources to get approval for a novel idea you can’t explain yet. If we know what we’re doing, it’s not research and it’s not innovation.

If you know it’s possible, and you can explain the roadmap well enough to satisfy someone whose job it is to be sceptical (and by that I mean (HM) Treasury), then it’s not innovation.

When proponents say no to all reasonable critiques, and refuse to consider divergent views, then blind faith dominates and goes wrong. In UK health data, sometimes the lesson taken from a data grab fiasco is to grab harder, largely because “data science” priorities are narrow, parochial, and batshit insane. That a wider RECOVERY trial and OpenSAFELY do not have a clear path to governance and continuation is, quite frankly, as indefensible as the drunken walk that is the path to delivery for some physical infrastructure. We do the right thing after funding many attempts at the wrong thing. The health data situation is covered in the Goldacre Review, which notes the problem for the funding arrangements for infrastructure, which is as true for data infrastructure as it is, well, was, for HS2.

Some may argue that an argument for infrastructure, or an innovation, isn’t tractable, but those who really care about the topic will find a way solely because they care about the topic enough not to listen to anyone who disagrees. This is where the effective altruists go wrong.

For AI startups, breaking the law is a rational act

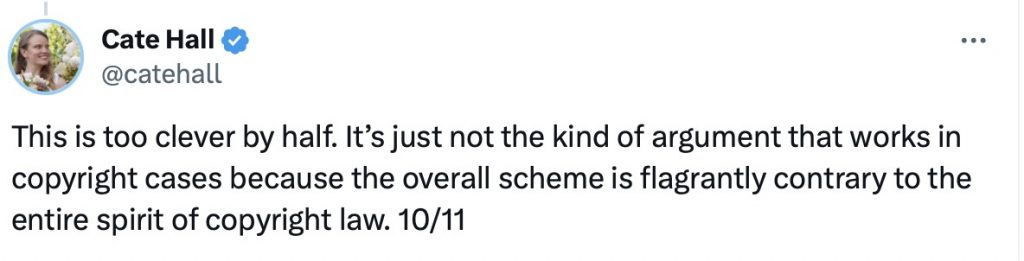

Cate’s legal analysis of ChatGPT law raises multiple questions for which law and rules aren’t the right approach.

It’s a rational act for tech startups to steal data. That it might blow the company up in 5 years is something that is five years away. A company focussing on narrow OKRs sees 5 years the same way Trump saw climate change in a decade – not something that matters to them.

Without a change in incentives, more and ever-faster AI will only reinforce this narrow focus.

If you’re starting an innovative project, it is by definition something that you don’t yet know how to do; things you can’t show to work. You have to measure outcomes at the end.

Even if you could get the meetings to use the data legally, you can’t answer many legitimate questions. The answers simply don’t exist yet – because it’s innovation and you need the data to do the work. Moving fast and breaking the law seems like a rational act.

If you can’t use the data legally, you either give up, or take what you can get.

Facebook had their deceptive VPN tool which told them to buy Instagram and WhatsApp; AlphaGo was built off KGS and just pulled moves out of the database like a stochastic bag of moves; ChatGPT used stolen books; orth.ai stole a hospital of data. It’s not just AI: Spotify started with stolen songs.

When Spotify made it big, almost everyone made money; everyone apart from the artists.

Kashmir Hill has written a great book on Clearview AI, which grabs faces off the internet and offers a face-matching app to law enforcement in the US, and who knows who else. Tory ministers want to legalise its use in shops here. Zuck started by stealing women’s photos to compare them with farm animals. When AI visionaries say humans will be fine, do they mean that in the sense Harvard women were?

For any innovation, if it’s clear that it’ll work, it’s not innovative. By definition, innovation is what we don’t know how to do yet, and there’ll always be unanswered questions and unanswerable questions. And people who most excel at those things are people who, to quote an ad, aren’t fond of rules.

They’ll use AI too.

VCs may want us to believe that these new innovators will act well, but there are no reasons to expect them to act better than Babylon, Theranos, orth.ai, Ionica, Autonomy, etc.

Money is a powerful incentive, because if something works and you find a business model, you can buy out past crimes, at least for a while. How many fringe events around the summit will be around a place which used to have a venue named “Sackler”?

Procurement rules should require disclosure of the data used to train AIs – if you can’t prove it, you can’t sell it. Stop the route to revenues, and you can encourage good behaviour.

Independent transparency and competitive markets are both necessary to support and reward legitimate, rule-compliant innovation, and both mean no monopolies or, in American speak, strong antitrust enforcement. Hi Google. Yes, following the rules makes innovation harder, but the goal isn’t to make AI easier, the goal is to make it survive contact with reality without lying to people. We will never stop the stochastic parrots lying to us until we stop lying to ourselves and lying to each other. OpenAI are at a strategic disadvantage.

If having all the data and giving it to a company with a fake website would cure cancer, the various large projects who have already done that would have something to show for it. None of them do.

If you rely on the good chaps theory of data governance, without good clear rules applied equally, the first people to show up are scammers. Whether that’s a “causes of cancer” study from a tobacco company, the pharmacy selling names and addresses of the cognitively impaired to convicted lottery fraudsters, hospital data for insurers to calculate likelihood of dying for premiums, or the genetic data bank handing patient data to longevity startups, the first and most interested people are always the crooks who poison the well for everyone; legitimate businesses take time.

Business models

The Department of Health has been trying for years to develop some guidance for “business models” with NHS data. They are nowhere. Absolutely nowhere. Like some aspects of the AI summit, it has focused on starts, not ends, and processes, not outcomes.

We wrote this explainer in 2019, and while there are subsequent examples of bad practice, it still fully applies.

The only business model that works for the public sector is minimising costs overall to the public sector. That means counting externalities, not simply moving money from one public sector body to another.

Rather than speculating about business models, NHS bodies should expect commodity pricing for all innovations built off any NHS data. There is no monopoly on health data, so any monopolies on health AIs are purely by choice. In health data, monopolies can always be broken.

Individuals and companies can choose who not to buy from

It can be hard to tell the difference between bullshit and breakthrough in AI, but then, you can’t tell the difference in other innovation either. Recognition of progress has to come from evidence and science, not hype and froth.

What everyone can do is say clearly that reputable people and organisations don’t buy AIs from companies, and won’t sell services to companies, where it’s not clearly shown that all the training data is legitimate. You might be able to steal every book in print and train your AI on it, but no legitimate entity should do business with you.

Will we buy from crooks, or not?

Our track record isn’t good – business model choice was as true for Spotify as it is for health AIs and AI risk.

I used to say that if we were realistic about risks we’d ban the sale of tobacco, and now I know why some in Government found the line funny; we’ll see how that gets implemented.

Since the summit is focussing on national security risk, let’s finish up there.

Real Risks

When there is enough concern, we do try to fix things. Eventually.

In the UK’s current omnicrisis, perhaps the fact that sewage from Heathrow regularly gets dumped into a river allows for accessible, cheap biomonitoring that is otherwise impossible; suboptimal in many ways, but opportunism and innovation can come from anywhere… Such monitoring can also apply to Parliament, looking also for the metabolites of illegal drugs, a project which might encourage a clear legal framework for biorisk monitoring, and be a way to move responsibility to Michael Gove’s department.

When money can be made, those who care most about the money are not incentivised to learn.

DeepMind draw the same parallel between climate and AI that I opened this piece with. But are Google not the equivalent of Exxon: making money from making the problem worse, arguing “if we don’t make the money doing this, someone else will”?

Disruptive Proactivity
